Intro

Artificial Intelligence (AI) is “the ability of a digital computer or computer-controlled robot to perform tasks commonly associated with intelligent beings” (Copeland). In other words, AI refers to computer programs that can emulate tasks we normally associate with human intelligence.

Today, everyone’s heard of artificial intelligence. We understand the concept, and we know that AI is already very real in the modern world. But what most don’t realize is that humans have been conceptualizing AI for nearly a century. The boom is happening now because technology and computing power have finally reached a point that makes AI feasible.

Yes, AI has become a reality today, but what will happen tomorrow? Or in a year? Or in 10 years? A whole lot can, and probably will, change in the near future, both in the technology that drives AI and in the use cases for AI.

The Turing Test

English computer scientist and mathematician Alan Turing was among the first to seriously ponder what the future of AI would look like. In 1950, Turing published a famous paper titled “Computing Machinery and Intelligence,” which introduced what is now known as the Turing Test (Turing himself called it the “imitation game”). The Turing Test evaluates whether a machine can converse in a manner indistinguishable from that of a human being. It is based on a text-only conversation between a person and a machine, much like a modern chatroom. If the person cannot identify the machine as a machine, the machine passes the test. Even today, the Turing Test remains a widely cited benchmark for what artificial intelligence strives to become. For the 1950s, the test was a breakthrough in thinking about artificial intelligence; people started to anticipate a future where AI could perform at the same level as humans.

The Influence of Hollywood

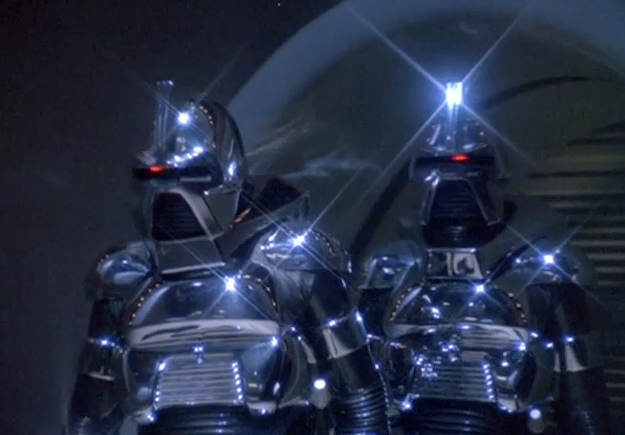

Courtesy of Battlestar Galactica Critical Commons

Sci-fi TV series “Battlestar Galactica” introduced Cylons, robot warriors that could act of their own accord. These robots were among the prominent early depictions of AI robots on television. The same effect took place on a larger scale with the release of The Terminator in 1984, a sci-fi classic that nearly everyone has heard of. For its time, the movie gave ordinary viewers a glimpse of what an AI-dominated future might look like. These Hollywood productions got people thinking about what would come and, more importantly, presented the idea that a completely artificial robot could look and act just like a human. Hollywood made AI a mainstream idea that stuck with the masses, despite widespread doubts about whether such technology was even possible.

The AI Winters

From the inception of the Turing Test up to the 1970s, there was an extreme amount of speculation and excitement behind artificial intelligence. Between 1970 and 1990, however, the overall excitement surrounding AI dropped sharply. This resulted in reduced funding for AI research, as well as fewer companies pursuing AI projects. Similar to what happened with the dot-com bubble, the field crashed when the high expectations for the technology were not met.

Breakthroughs

Despite the decline during the AI winters, development continued, though at a much slower pace than before. Eventually, breakthroughs in AI development revitalized the industry and propelled it to where it is today.

IBM Deep Blue: Much excitement was restored to the field in 1997, when IBM’s Deep Blue beat world chess champion and grandmaster Garry Kasparov (Courtesy of PRI) in a six-game match. The algorithm behind Deep Blue was essentially a brute-force search: it evaluated an enormous number of possible moves and countermoves and chose the one that scored best. Deep Blue did not involve machine learning, the process of a machine getting better through experience. Even so, the feat startled the whole world. The notion that a computer program could defeat one of the world’s greatest minds at what he does best had seemed far-fetched to many. The Deep Blue victory sparked a wave of new AI projects.
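To make the idea of "evaluate the options and choose the best one" concrete, here is a minimal sketch of exhaustive game-tree search (minimax) in Python. It is not Deep Blue's actual algorithm, which ran on specialized hardware and was far more elaborate. The game is an assumption chosen for brevity: a toy stone-taking game in which players alternately remove 1 to 3 stones and whoever takes the last stone wins. The names `MAX_TAKE`, `minimax`, and `best_move` are hypothetical.

```python
from functools import lru_cache

MAX_TAKE = 3  # assumed toy rule: a player removes 1 to 3 stones per turn


@lru_cache(maxsize=None)
def minimax(stones: int, maximizing: bool) -> int:
    """Return +1 if the maximizing player wins with best play, else -1."""
    if stones == 0:
        # No stones left: the player who just moved took the last one and won.
        return -1 if maximizing else 1
    # Score every legal move by searching the rest of the game from there.
    scores = [
        minimax(stones - take, not maximizing)
        for take in range(1, min(MAX_TAKE, stones) + 1)
    ]
    # Each side assumes the other will also play its best move.
    return max(scores) if maximizing else min(scores)


def best_move(stones: int) -> int:
    """Pick how many stones to take so the resulting position scores best."""
    moves = range(1, min(MAX_TAKE, stones) + 1)
    return max(moves, key=lambda take: minimax(stones - take, False))


if __name__ == "__main__":
    print(best_move(5))
```

Nothing here is learned from experience: the program simply looks ahead through every continuation. Chess has far too many positions for a full search like this, which is why a real chess engine has to cut the search off and estimate how good a position is.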

IBM Watson: IBM continued to take the lead in the development of AI, and its next big stride was the completion of IBM Watson. This time, IBM moved away from chess and turned to a larger challenge: Jeopardy! For machines, Jeopardy! is a fundamentally harder game to conquer. In chess, there is always a finite number of legal moves in any position on the board. In Jeopardy!, on the other hand, it is uncertain what question will be asked next; the possibilities for what the host may ask are practically unlimited. To tackle this, IBM made major advances in natural language processing (NLP), so that a machine could understand a question, break it down into its essential components, and use that breakdown to search for an answer. Watson was also a prominent early use of machine learning, and it drew on information from over 200 million pages stored in its memory. With such a large body of data, and with the ability to turn human language into a form that could be searched accurately, Watson was able to find answers to the clues it was given. Ultimately, Watson competed in 2011 against Ken Jennings and Brad Rutter, two of the show’s best players, and handily beat both (Courtesy of TED). It was yet another achievement that showed people how close machines were coming to human performance. The result also encouraged continued research and development in NLP, which is now part of our daily lives in the form of Siri, Alexa, and many more.

“I for one welcome our new computer overlords,” — Ken Jennings
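The question-answering steps described for Watson (reduce a question to its essential components, then search stored text for a match) can be illustrated with a very rough Python sketch. This is not how Watson worked internally; it is only a keyword-overlap toy. The stop-word list, the three-entry `DOCUMENTS` corpus, and the function names `keywords` and `answer` are all made up for illustration.

```python
import re

# Hypothetical stop-word list: common words that carry little meaning.
STOP_WORDS = {
    "a", "an", "and", "by", "in", "is", "of", "that", "the",
    "this", "to", "was", "what", "which", "who",
}

# Hypothetical mini corpus standing in for a large store of pages.
DOCUMENTS = {
    "Alan Turing": "Alan Turing published Computing Machinery and Intelligence in 1950.",
    "Deep Blue": "Deep Blue was an IBM chess computer that defeated Garry Kasparov.",
    "Watson": "Watson was an IBM system that played the quiz show Jeopardy!",
}


def keywords(text: str) -> set:
    """Break text down to its essential words: lowercase, no stop words."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {word for word in words if word not in STOP_WORDS}


def answer(question: str) -> str:
    """Return the title of the document sharing the most keywords with the question."""
    question_words = keywords(question)
    return max(
        DOCUMENTS,
        key=lambda title: len(question_words & keywords(DOCUMENTS[title])),
    )


if __name__ == "__main__":
    print(answer("Which IBM computer defeated Garry Kasparov in chess?"))
```

A real system has to handle wordplay, synonyms, and confidence in its own answers, none of which simple keyword overlap captures.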